For those of you who are not in the security space and may not have read the Cloud Security Alliance’s “Guidance for Critical Areas of Focus,” you may have missed the “Cloud Architectural Framework” section I wrote as a contribution.

For those of you who are not in the security space and may not have read the Cloud Security Alliance’s “Guidance for Critical Areas of Focus,” you may have missed the “Cloud Architectural Framework” section I wrote as a contribution.

We are working on improving the entire guide, but I thought I would re-publish the Cloud Architectural Framework section and solicit comments here as well as “set it free” as a stand-alone reference document.

Please keep in mind, I wrote this before many of the other papers such as NIST’s were officially published, so the normal churn in the blogosphere and general Cloud space may mean that some of the terms and definitions have settled down.

I hope it proves useful, even in its current form (I have many updates to make as part of the v2 Guidance document.)

/Hoff

Problem Statement

Cloud Computing (“Cloud”) is a catch-all term that describes the evolutionary development of many existing technologies and approaches to computing that at its most basic, separates application and information resources from the underlying infrastructure and mechanisms used to deliver them with the addition of elastic scale and the utility model of allocation. Cloud computing enhances collaboration, agility, scale, availability and provides the potential for cost reduction through optimized and efficient computing.

More specifically, Cloud describes the use of a collection of distributed services, applications, information and infrastructure comprised of pools of compute, network, information and storage resources. These components can be rapidly orchestrated, provisioned, implemented and decommissioned using an on-demand utility-like model of allocation and consumption. Cloud services are most often, but not always, utilized in conjunction with and enabled by virtualization technologies to provide dynamic integration, provisioning, orchestration, mobility and scale.

While the very definition of Cloud suggests the decoupling of resources from the physical affinity to and location of the infrastructure that delivers them, many descriptions of Cloud go to one extreme or another by either exaggerating or artificially limiting the many attributes of Cloud. This is often purposely done in an attempt to inflate or marginalize its scope. Some examples include the suggestions that for a service to be Cloud-based, that the Internet must be used as a transport, a web browser must be used as an access modality or that the resources are always shared in a multi-tenant environment outside of the “perimeter.” What is missing in these definitions is context.

From an architectural perspective given this abstracted evolution of technology, there is much confusion surrounding how Cloud is both similar and differs from existing models and how these similarities and differences might impact the organizational, operational and technological approaches to Cloud adoption as it relates to traditional network and information security practices. There are those who say Cloud is a novel sea-change and technical revolution while others suggest it is a natural evolution and coalescence of technology, economy, and culture. The truth is somewhere in between.

There are many models available today which attempt to address Cloud from the perspective of academicians, architects, engineers, developers, managers and even consumers. We will focus on a model and methodology that is specifically tailored to the unique perspectives of IT network and security professionals.

The keys to understanding how Cloud architecture impacts security architecture are a common and concise lexicon coupled with a consistent taxonomy of offerings by which Cloud services and architecture can be deconstructed, mapped to a model of compensating security and operational controls, risk assessment and management frameworks and in turn, compliance standards.

Setting the Context: Cloud Computing Defined

Understanding how Cloud Computing architecture impacts security architecture requires an understanding of Cloud’s principal characteristics, the manner in which cloud providers deliver and deploy services, how they are consumed, and ultimately how they need to be safeguarded.

The scope of this area of focus is not to define the specific security benefits or challenges presented by Cloud Computing as these are covered in depth in the other 14 domains of concern:

- Information lifecycle management

- Governance and Enterprise Risk Management

- Compliance & Audit

- General Legal

- eDiscovery

- Encryption and Key Management

- Identity and Access Management

- Storage

- Virtualization

- Application Security

- Portability & Interoperability

- Data Center Operations Management

- Incident Response, Notification, Remediation

- “Traditional” Security impact (business continuity, disaster recovery, physical security)

We will discuss the various approaches and derivative offerings of Cloud and how they impact security from an architectural perspective using an in-process model developed as a community effort associated with the Cloud Security Alliance.

Principal Characteristics of Cloud Computing

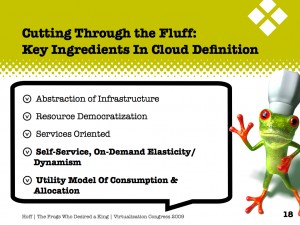

Cloud services are based upon five principal characteristics that demonstrate their relation to, and differences from, traditional computing approaches:

- Abstraction of Infrastructure

The compute, network and storage infrastructure resources are abstracted from the application and information resources as a function of service delivery. Where and by what physical resource that data is processed, transmitted and stored on becomes largely opaque from the perspective of an application or services’ ability to deliver it. Infrastructure resources are generally pooled in order to deliver service regardless of the tenancy model employed – shared or dedicated. This abstraction is generally provided by means of high levels of virtualization at the chipset and operating system levels or enabled at the higher levels by heavily customized filesystems, operating systems or communication protocols.

- Resource Democratization

The abstraction of infrastructure yields the notion of resource democratization – whether infrastructure, applications, or information – and provides the capability for pooled resources to be made available and accessible to anyone or anything authorized to utilize them using standardized methods for doing so.

- Services Oriented Architecture

As the abstraction of infrastructure from application and information yields well-defined and loosely-coupled resource democratization, the notion of utilizing these components in whole or part, alone or with integration, provides a services oriented architecture where resources may be accessed and utilized in a standard way. In this model, the focus is on the delivery of service and not the management of infrastructure.

- Elasticity/Dynamism

The on-demand model of Cloud provisioning coupled with high levels of automation, virtualization, and ubiquitous, reliable and high-speed connectivity provides for the capability to rapidly expand or contract resource allocation to service definition and requirements using a self-service model that scales to as-needed capacity. Since resources are pooled, better utilization and service levels can be achieved.

- Utility Model Of Consumption & Allocation

The abstracted, democratized, service-oriented and elastic nature of Cloud combined with tight automation, orchestration, provisioning and self-service then allows for dynamic allocation of resources based on any number of governing input parameters. Given the visibility at an atomic level, the consumption of resources can then be used to provide an “all-you-can-eat” but “pay-by-the-bite” metered utility-cost and usage model. This facilitates greater cost efficiencies and scale as well as manageable and predictive costs.

Cloud Service Delivery Models

Three archetypal models and the derivative combinations thereof generally describe cloud service delivery. The three individual models are often referred to as the “SPI Model,” where “SPI” refers to Software, Platform and Infrastructure (as a service) respectively and are defined thusly[1]:

- Software as a Service (SaaS)

The capability provided to the consumer is to use the provider’s applications running on a cloud infrastructure and accessible from various client devices through a thin client interface such as a Web browser (e.g., web-based email). The consumer does not manage or control the underlying cloud infrastructure, network, servers, operating systems, storage, or even individual application capabilities, with the possible exception of limited user-specific application configuration settings.

- Platform as a Service (PaaS)

The capability provided to the consumer is to deploy onto the cloud infrastructure consumer-created applications using programming languages and tools supported by the provider (e.g., java, python, .Net). The consumer does not manage or control the underlying cloud infrastructure, network, servers, operating systems, or storage, but the consumer has control over the deployed applications and possibly application hosting environment configurations.

- Infrastructure as a Service (IaaS)

The capability provided to the consumer is to rent processing, storage, networks, and other fundamental computing resources where the consumer is able to deploy and run arbitrary software, which can include operating systems and applications. The consumer does not manage or control the underlying cloud infrastructure but has control over operating systems, storage, deployed applications, and possibly select networking components (e.g., firewalls, load balancers).

Understanding the relationship and dependencies between these models is critical. IaaS is the foundation of all Cloud services with PaaS building upon IaaS, and SaaS – in turn – building upon PaaS. We will cover this in more detail later in the document.

The OpenCrowd Cloud Solutions Taxonomy shown in Figure 1 provides an excellent reference that demonstrates the swelling ranks of solutions available today in each of the models above.

Narrowing the scope or specific capabilities and functionality within each of the *aaS offerings or employing the functional coupling of services and capabilities across them may yield derivative classifications. For example “Storage as a Service” is a specific sub-offering with the IaaS “family,” “Database as a Service” may be seen as a derivative of PaaS, etc.

Each of these models yields significant trade-offs in the areas of integrated features, openness (extensibility) and security. We will address these later in the document.

Figure 1 - The OpenCrowd Cloud Taxonomy

Cloud Service Deployment and Consumption Modalities

Regardless of the delivery model utilized (SaaS, PaaS, IaaS,) there are four primary ways in which Cloud services are deployed and are characterized:

- Private

Private Clouds are provided by an organization or their designated service provider and offer a single-tenant (dedicated) operating environment with all the benefits and functionality of elasticity and the accountability/utility model of Cloud.The physical infrastructure may be owned by and/or physically located in the organization’s datacenters (on-premise) or that of a designated service provider (off-premise) with an extension of management and security control planes controlled by the organization or designated service provider respectively.

The consumers of the service are considered “trusted.” Trusted consumers of service are those who are considered part of an organization’s legal/contractual

umbrella including employees, contractors, & business partners. Untrusted consumers are those that may be authorized to consume some/all services but are not logical extensions of the organization.

- Public

Public Clouds are provided by a designated service provider and may offer either a single-tenant (dedicated) or multi-tenant (shared) operating environment with all the benefits and functionality of elasticity and the accountability/utility model of Cloud.

The physical infrastructure is generally owned by and managed by the designated service provider and located within the provider’s datacenters (off-premise.) Consumers of Public Cloud services are considered to be untrusted.

- Managed

Managed Clouds are provided by a designated service provider and may offer either a single-tenant (dedicated) or multi-tenant (shared) operating environment with all the benefits and functionality of elasticity and the accountability/utility model of Cloud.The physical infrastructure is owned by and/or physically located in the organization’s datacenters with an extension of management and security control planes controlled by the designated service provider. Consumers of Managed Clouds may be trusted or untrusted.

- Hybrid

Hybrid Clouds are a combination of public and private cloud offerings that allow for transitive information exchange and possibly application compatibility and portability across disparate Cloud service offerings and providers utilizing standard or proprietary methodologies regardless of ownership or location. This model provides for an extension of management and security control planes. Consumers of Hybrid Clouds may be trusted or untrusted.

The difficulty in using a single label to describe an entire service/offering is that it actually attempts to describe the following elements:

- Who manages it

- Who owns it

- Where it’s located

- Who has access to it

- How it’s accessed

The notion of Public, Private, Managed and Hybrid when describing Cloud services really denotes the attribution of management and the availability of service to specific consumers of the service.

It is important to note that often the characterizations that describe how Cloud services are deployed are often used interchangeably with the notion of where they are provided; as such, you may often see public and private clouds referred to as “external” or “internal” clouds. This can be very confusing.

The manner in which Cloud services are offered and ultimately consumed is then often described relative to the location of the asset/resource/service owner’s management or security “perimeter” which is usually defined by the presence of a “firewall.”

While it is important to understand where within the context of an enforceable security boundary an asset lives, the problem with interchanging or substituting these definitions is that the notion of a well-demarcated perimeter separating the “outside” from the “inside” is an anachronistic concept.

It is clear that the impact of the re-perimeterization and the erosion of trust boundaries we have seen in the enterprise is amplified and accelerated due to Cloud. This is thanks to ubiquitous connectivity provided to devices, the amorphous nature of information interchange, the ineffectiveness of traditional static security controls which cannot deal with the dynamic nature of Cloud services and the mobility and velocity at which Cloud services operate.

Thus the deployment and consumption modalities of Cloud should be thought of not only within the construct of “internal” or “external” as it relates to asset/resource/service physical location, but also by whom they are being consumed and who is responsible for their governance, security and compliance to policies and standards.

This is not to suggest that the on- or off-premise location of an asset/resource/information does not affect the security and risk posture of an organization, because it does, but it also depends upon the following:

- The types of application/information/services being managed

- Who manages them and how

- How controls are integrated

- Regulatory issues

Table 1 illustrates the summarization of these points:

Table 1 - Cloud Computing Service Deployment

As an example, one could classify a service as IaaS/Public/External (Amazon’s AWS/EC2 offering is a good example) as well as SaaS/Managed/Internal (an internally-hosted, but third party-managed custom SaaS stack using Eucalyptus, as an example.)

Thus when assessing the impact a particular Cloud service may have on one’s security posture and overall security architecture, it is necessary to classify the asset/resource/service within the context of not only its location but also its criticality and business impact as it relates to management and security. This means that an appropriate level of risk assessment is performed prior to entrusting it to the vagaries of “The Cloud.”

Which Cloud service deployment and consumption model is used depends upon the nature of the service and the requirements that govern it. As we demonstrate later in this document, there are significant trade-offs in each of the models in terms of integrated features, extensibility, cost, administrative involvement and security.

Figure 2 - Cloud Reference Model

It is therefore important to be able to classify a Cloud service quickly and accurately and compare it to a reference model that is familiar to an IT networking or security professional.

Reference models such as that shown in Figure 2 allows one to visualize the boundaries of *aaS definitions, how and where a particular Cloud service fits, and also how the discrete *aaS models align and interact with one another. This is presented in an OSI-like layered structure with which security and network professionals should be familiar.

Considering each of the *aaS models as a self-contained “solution stack” of integrated functionality with IaaS providing the foundation, it becomes clear that the other two models – PaaS and SaaS – in turn build upon it.

Each of the abstract layers in the reference model represents elements which when combined, comprise the services offerings in each class.

IaaS includes the entire infrastructure resource stack from the facilities to the hardware platforms that reside in them. Further, IaaS incorporates the capability to abstract resources (or not) as well as deliver physical and logical connectivity to those resources. Ultimately, IaaS provides a set of API’s which allows for management and other forms of interaction with the infrastructure by the consumer of the service.

Amazon’s AWS Elastic Compute Cloud (EC2) is a good example of an IaaS offering.

PaaS sits atop IaaS and adds an additional layer of integration with application development frameworks, middleware capabilities and functions such as database, messaging, and queuing that allows developers to build applications which are coupled to the platform and whose programming languages and tools are supported by the stack. Google’s AppEngine is a good example of PaaS.

SaaS in turn is built upon the underlying IaaS and PaaS stacks and provides a self-contained operating environment used to deliver the entire user experience including the content, how it is presented, the application(s) and management capabilities.

SalesForce.com is a good example of SaaS.

It should therefore be clear that there are significant trade-offs in each of the models in terms of features, openness (extensibility) and security.

Figure 3 - Trade-off’s Across *aaS Offerings

Figure 3 demonstrates the interplay and trade-offs between the three *aaS models:

- Generally, SaaS provides a large amount of integrated features built directly into the offering with the least amount of extensibility and a relatively high level of security.

- PaaS generally offers less integrated features since it is designed to enable developers to build their own applications on top of the platform and is therefore more extensible than SaaS by nature, but due to this balance trades off on security features and capabilities.

- IaaS provides few, if any, application-like features, provides for enormous extensibility but generally less security capabilities and functionality beyond protecting the infrastructure itself since it expects operating systems, applications and content to be managed and secured by the consumer.

The key takeaway from a security architecture perspective in comparing these models is that the lower down the stack the Cloud service provider stops, the more security capabilities and management the consumer is responsible for implementing and managing themselves.

This is critical because once a Cloud service can be classified and referenced against the model, mapping the security architecture, business and regulatory or other compliance requirements against it becomes a gap-analysis exercise to determine the general “security” posture of a service and how it relates to the assurance and protection requirements of an asset.

Figure 4 below shows an example of how mapping a Cloud service can be compared to a catalog of compensating controls to determine what existing controls exist and which do not as provided by either the consumer, the Cloud service provider or another third party.

- Figure 4 – Mapping the Cloud Model to the Security Model

Once this gap analysis is complete as governed by the requirements of any regulatory or other compliance mandates, it becomes much easier to determine what needs to be done in order to feed back into a risk assessment framework to determine how the gaps and ultimately how the risk should be addressed: accept, transfer, mitigate or ignore.

Conclusion

Understanding how architecture, technology, process and human capital requirements change or remain the same when deploying Cloud Computing services is critical. Without a clear understanding of the higher-level architectural implications of Cloud services, it is impossible to address more detailed issues in a rational way.

The keys to understanding how Cloud architecture impacts security architecture are a common and concise lexicon coupled with a consistent taxonomy of offerings by which Cloud services and architecture can be deconstructed, mapped to a model of compensating security and operational controls, risk assessment and management frameworks and in turn, compliance standards.

[1] Credit: Peter M. Mell, NIST

There I was in the middle of a half moon yoga pose when the thought hit…

There I was in the middle of a half moon yoga pose when the thought hit… For those of you who are not in the security space and may not have read the

For those of you who are not in the security space and may not have read the

Recent Comments